I am currently a fourth-year PhD student at IST Austria, supervised by Dan Alistarh, and a second-time intern at Google DeepMind. My research focuses on making massive machine learning models more efficient.

In 2022, I developed GPTQ and SparseGPT, the first successful low-bit quantization and sparsification methods for extremely large language models. In 2023, I identified scaling laws for sparsely-connected foundation models and built QMoE, a tool for efficiently compressing and executing trillion-parameter Mixture-of-Experts models in limited-resource settings. Most recently, I implemented Marlin, the first INT4xFP16 LLM inference kernel with near-ideal speedup at medium batch sizes.

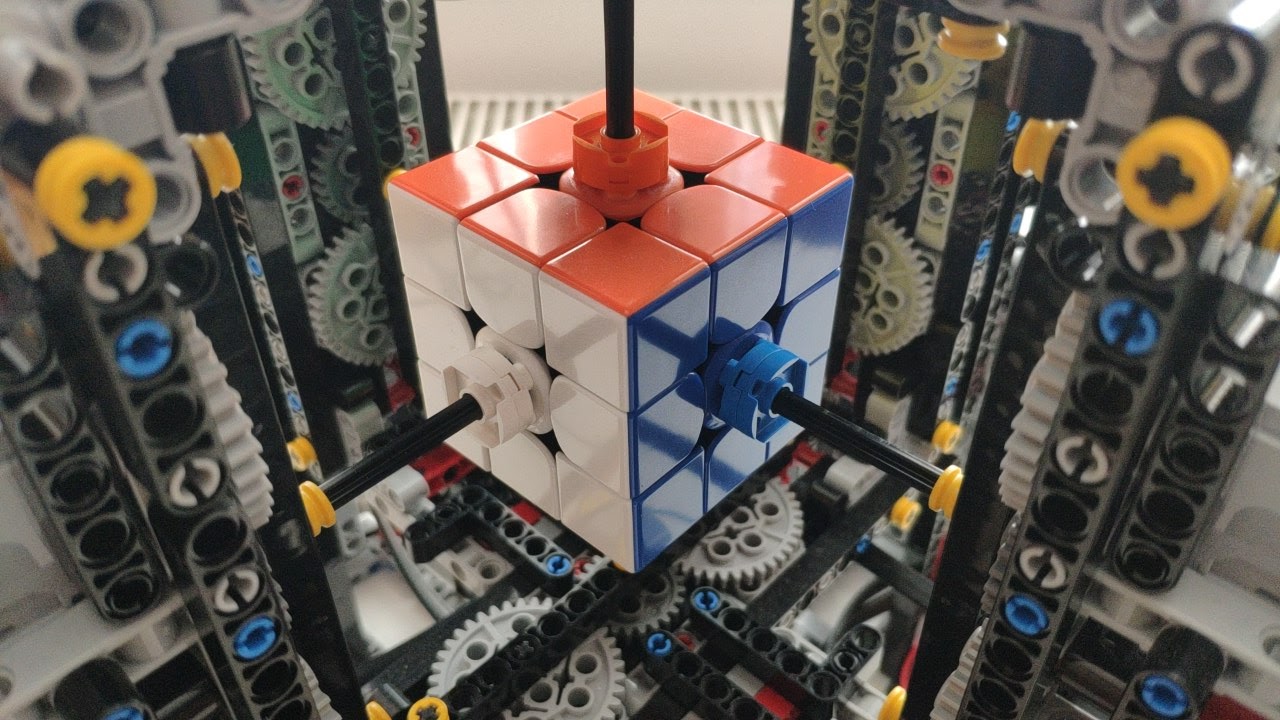

In my free time, I love building super-fast Rubik's Cube solving robots, some of which have broken long-standing records and collected millions of views on YouTube.

Highlighted Work

GPTQ (ICLR 2023):

- The first quantization method able to accurately compress massive LLMs to 4- or 3-bit precision.

- The first open-source GPU kernel demonstrating major generative inference speedup with standard weight-only quantization.

- Supported by popular libraries, including Hugging Face Transformers, NVIDIA TensorRT-LLM and Intel Neural Compressor.

- 1.5k+ stars on GitHub, plus the popular forks AutoGPTQ (3k+ stars) and GPTQ-for-LLaMa (2.5k+ stars).

SparseGPT (Oral, ICML 2023):

- The first algorithm able to accurately induce significant sparsity in 100+ billion parameter models.

- Featured in Communications of the ACM and on national television.

- Invited talks at Apple, Amazon and Google.

- 500+ stars on GitHub.

Rubik's Cube Robots:

- 10+ million views on YouTube; also presented live on the BBC.

- Cuboth: the world's fastest robot to solve an unmodified Rubik's Cube. Using equivalent hardware, it beat the previous record, which had stood for 7 years, by 2x.

- rob-twophase & qphase: currently the best computer cube-solving algorithms, and the first to take robot mechanics directly into account during the search process.

- SquidCuber: the first machine built entirely from Lego to solve a cube in one second on average (2x faster than the previous record, which had stood for 5 years).